1. Introduction


Image matching is an important research topic in digital image processing and is widely used in computer vision, virtual reality scene generation, aerospace remote sensing measurement, medical image analysis, optical and radar tracking, and scene matching guidance. It involves many related areas, such as image preprocessing, image sampling, image segmentation, and feature extraction, and draws closely on computer vision, multidimensional signal processing, and numerical computation. Finding an effective image matching method that can quickly and accurately locate the required image information in a large amount of data has therefore become an urgent problem.

The feature-based method [1] is a classical approach to image matching. Its principle is to select typical features in the image as the basic units of motion estimation. This approach is close to human visual characteristics, and its performance depends mainly on the stability of feature extraction and the accuracy of feature localization. The SIFT (scale-invariant feature transform) feature [2, 3] is a local image feature that is invariant to scale, rotation, and brightness changes and remains stable to some degree under affine transformation and noise. Therefore, to meet the requirements of video frame matching, this paper exploits the scale and rotation invariance of SIFT features and combines Euclidean distance discrimination with the RANSAC method in the matching of SIFT feature points, yielding a high-precision video inter-frame matching algorithm.

2. Video inter-frame matching based on SIFT features

Rotation and scaling are common in the video inter-frame images to be processed, so the commonly used Harris corners [4] and KL corners [5] are not suitable for this application. The SIFT feature is a local image feature extracted from the DoG (difference-of-Gaussians) scale space of each of the two frames. Taking an aerial video captured from a helicopter as an example, the SIFT feature points of two images in the video are extracted and matched; the simulation results are shown in Fig. 1.

Figure 1 SIFT feature matching results of two images in the video

The simulation results show that SIFT features are invariant to rotation, scaling, and brightness variation, and also remain reasonably stable under viewing-angle change, affine transformation, and noise. Because the algorithm does not take single pixels as its object of study, its adaptability to local deformation of the image is improved.

However, the traditional approach of matching feature points by the Euclidean distance discrimination method alone still produces mismatches. Figure 2 displays the feature points extracted from two adjacent frames in the same frame image. The mismatched points fall into two categories. The first type is the completely wrong match, as shown in box (a) of Figure 2: the two paired points have the same or very similar SIFT feature vectors, but they are not the same image feature and do not actually correspond. The second type is the match with error, as shown in box (b) of Figure 2: the two points are the same image feature, but because of lens shake, local motion in the image, and other disturbances, the coordinate difference of this point between the two frames differs significantly from the coordinate difference of most other points. Both cases degrade the accuracy of the motion parameter estimates, so both are mismatched points that need to be removed.

Figure 2 Euclidean distance method to identify matching points

Experiments show that the accuracy of key point matching by Euclidean distance depends on the ratio threshold r. When r is too large, a large number of mismatched points appear; when r is too small, too few matching points may remain. Moreover, if the Euclidean distance between the feature vectors of two different feature points in the two frames is very small, r must be set very small to remove that erroneous pair. The total number of matching points then becomes too small or even zero, and the subsequent parameter calculation cannot be performed. The mismatch problem therefore cannot be solved simply by adjusting the ratio threshold r in Euclidean distance matching, and it is difficult to obtain a feature point matching result that is both highly accurate and of moderate size.

3. Improvement of matching criteria

The RANSAC method differs from the traditional method as follows. The traditional method first computes initial parameter values by treating all data points as inliers, and then recomputes the parameters and counts the inliers and outliers. The RANSAC method instead starts from a subset of the data, treats it as inliers to obtain initial values, and then looks for all other inliers in the data set. Using RANSAC to verify the feature points produced by rough Euclidean distance matching therefore minimizes the influence of noise and outliers. In this paper, the Euclidean distance between key point feature vectors is first used to measure the similarity of feature points in two images of the video and to perform rough matching. The RANSAC method is then applied iteratively to the rough matching result [7,8]. This second, exact matching eliminates the mismatched points in the rough match, leaving exact matching points and an accurate image matching result.

3.1 Rough matching with the Euclidean distance method

After the SIFT feature vectors of the two frames are generated, the Euclidean distance between key point feature vectors is first used as the similarity measure for key points in the two frames. The Euclidean distance is a commonly used distance definition: the true distance between two points in an n-dimensional space.

The formula for calculating the Euclidean distance of feature points in two frames of images is:

$$d = \sqrt{\sum_{i=1}^{n} \left( X_{i1} - X_{i2} \right)^{2}} \qquad (1)$$

where X_{i1} is the i-th component of the feature vector of a point in the first frame, and X_{i2} is the i-th component of the feature vector of a point in the second frame.

Judgment criterion: take a key point in image 1 and find the two key points in image 2 with the smallest Euclidean distances to it. If the nearest distance d1 divided by the second-nearest distance d2 is less than a certain ratio threshold r, the pair is accepted; otherwise it is discarded.
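The judgment criterion above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the function name `ratio_test_matches` and the default threshold `r=0.8` are assumptions, since the article does not state the value of r it used.

```python
import numpy as np

def ratio_test_matches(desc1, desc2, r=0.8):
    """Return (i, j) index pairs accepted by the nearest / second-nearest ratio test.

    desc1: (N1, n) array of feature vectors from frame 1
    desc2: (N2, n) array of feature vectors from frame 2, with N2 >= 2
    r:     ratio threshold (0.8 is an illustrative value, not from the article)
    """
    desc1 = np.asarray(desc1, dtype=float)
    desc2 = np.asarray(desc2, dtype=float)
    matches = []
    for i, d in enumerate(desc1):
        # Euclidean distance from this key point to every key point in frame 2
        dist = np.sqrt(np.sum((desc2 - d) ** 2, axis=1))
        j1, j2 = np.argsort(dist)[:2]      # nearest and second-nearest
        d1, d2 = dist[j1], dist[j2]
        # d1 / d2 < r, written without a division so d2 == 0 cannot fail
        if d1 < r * d2:
            matches.append((i, int(j1)))
    return matches
```

A key point whose two nearest neighbours are almost equally close is rejected, which is exactly the ambiguous case the ratio threshold is meant to filter out.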

3.2 Second, exact matching with the RANSAC method

The basic idea of the RANSAC (Random Sample Consensus) method [6] is not to treat all available input data indiscriminately during parameter estimation. Instead, an objective function is first designed for the specific problem, and its parameter values are then estimated iteratively. Using these initial parameter values, all the data are divided into "inliers" (points that satisfy the estimated parameters) and "outliers" (points that do not). Finally, the parameters of the function are recalculated using all the inliers.

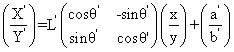

The global motion considered here includes scale, rotation, and translation. Let X and Y be the position coordinates of a feature point in one frame, and x and y the position coordinates of the corresponding feature point in the other frame. Let L be the scale change between the two frames, θ the rotation angle, and a and b the translations. The global motion can then be expressed as the following similarity transformation:

$$\begin{bmatrix} X \\ Y \end{bmatrix} = L \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} + \begin{bmatrix} a \\ b \end{bmatrix} \qquad (2)$$

Eight pairs are selected from the coarse matching points of the two frames, and their coordinate information is used to form a system of equations from which the motion parameters L, θ, a, and b are calculated. Using these parameters, all the points (x, y) in the second frame image are transformed into the first frame image. With the transformed point coordinates denoted X', Y':

$$\begin{bmatrix} X' \\ Y' \end{bmatrix} = L \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} + \begin{bmatrix} a \\ b \end{bmatrix} \qquad (3)$$

Judgment criterion: a point transformed into the first frame image is tested against its corresponding point (X, Y) in the first frame. If the two coincide or nearly coincide (the displacement is less than 1 pixel), namely:

$$\sqrt{\left( X' - X \right)^{2} + \left( Y' - Y \right)^{2}} < 1 \qquad (4)$$

then they are a pair of corresponding points confirmed by the transformation. If the ratio of the number of such corresponding points to the total number of points reaches a certain threshold (selected manually), the transformation is considered acceptable. The motion parameters are then re-solved by the least squares method using all the corresponding points confirmed by the transformation, that is, the inliers, and the matching test ends with no further iterations. If the ratio does not meet the requirement, a new set of points is selected and the corresponding motion parameters are calculated again. If the ratio fails to reach the threshold for the motion parameters obtained from every set of points, it is concluded that there is no matching relationship between the two frames.
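The procedure described above can be sketched as follows. This is a hedged illustration under stated assumptions, not the authors' code: the function names, the acceptance ratio `min_ratio=0.7`, and the trial cap `max_trials=200` are hypothetical, because the article only says the threshold is selected manually. The sketch substitutes p = L cos θ and q = L sin θ so that the similarity transformation becomes linear in (p, q, a, b) and can be solved by least squares.

```python
import numpy as np

def fit_similarity(src, dst):
    """Least-squares fit of dst = L * R(theta) * src + (a, b).

    Returns (p, q, a, b) with p = L*cos(theta), q = L*sin(theta).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    x, y = src[:, 0], src[:, 1]
    ones, zeros = np.ones_like(x), np.zeros_like(x)
    # X = p*x - q*y + a   and   Y = q*x + p*y + b
    A = np.vstack([
        np.column_stack([x, -y, ones, zeros]),
        np.column_stack([y, x, zeros, ones]),
    ])
    rhs = np.concatenate([dst[:, 0], dst[:, 1]])
    params, *_ = np.linalg.lstsq(A, rhs, rcond=None)
    return params

def apply_similarity(params, pts):
    """Map points (x, y) with the fitted parameters to (X', Y')."""
    p, q, a, b = params
    pts = np.asarray(pts, dtype=float)
    x, y = pts[:, 0], pts[:, 1]
    return np.column_stack([p * x - q * y + a, q * x + p * y + b])

def ransac_similarity(pts1, pts2, sample_size=8, tol=1.0,
                      min_ratio=0.7, max_trials=200, seed=0):
    """pts1[k] (first frame) and pts2[k] (second frame) are a rough-matched pair.

    Returns (params, inlier_mask); params is None if no sample is accepted.
    """
    pts1 = np.asarray(pts1, dtype=float)
    pts2 = np.asarray(pts2, dtype=float)
    n = len(pts1)
    if n < sample_size:
        return None, np.zeros(n, dtype=bool)
    rng = np.random.default_rng(seed)
    for _ in range(max_trials):
        idx = rng.choice(n, size=sample_size, replace=False)
        params = fit_similarity(pts2[idx], pts1[idx])
        # displacement between transformed second-frame points and first-frame points
        err = np.linalg.norm(apply_similarity(params, pts2) - pts1, axis=1)
        inliers = err < tol                      # less than 1 pixel by default
        if inliers.mean() >= min_ratio:
            # accept: re-solve with all inliers and stop iterating
            return fit_similarity(pts2[inliers], pts1[inliers]), inliers
    return None, np.zeros(n, dtype=bool)         # no matching relationship found
```

The scale and rotation can be recovered from the result as `L = np.hypot(p, q)` and `theta = np.arctan2(q, p)`.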

4. Experimental results and analysis

In the experiment, the video image size is 884×662 and the video frame rate is 25 fps. The computer has an AMD Athlon(tm) 64 X2 Dual Core Processor 5000+ and 2.00 GB of memory. Fig. 3 shows the matching points obtained by rough matching with the Euclidean distance discrimination method: (a) and (b) are the matching points obtained in the two frames, and (c) shows the displacement of each feature point between the two frames. Most of the point displacements are small, so a long line in (c) indicates a large displacement and identifies the pair as a mismatched point.

Figure 3 Rough matching results of the Euclidean distance discrimination method, panels (a), (b), (c)

Figure 4 shows the matching result obtained by the second, exact matching with the RANSAC method. As Fig. 4(c) shows, after the second matching both the wrong matches from the rough matching and the matches disturbed by local motion are removed, leaving a completely correct set of matches. Table 1 compares the SIFT feature matching results between video inter-frame images obtained with the traditional Euclidean distance discrimination algorithm and with the Euclidean distance-RANSAC two-stage matching method.

Figure 4 Matching results obtained by the second matching with the RANSAC method, panels (a), (b), (c)

Table 1 Comparison of inter-frame image feature matching results for the Euclidean distance method and the Euclidean distance-RANSAC two-stage matching method

Over the 430 frames of the video, the Euclidean distance matching algorithm gives an average correct matching rate of 96.2% and an average peak signal-to-noise ratio (PSNR) of 21.8541 dB, and only 8 frames reach a correct matching rate of 100%. The Euclidean distance-RANSAC two-stage matching method used in this paper gives an average correct matching rate of 98.8% and an average PSNR of 31.2271 dB, and 349 frames reach a correct matching rate of 100%, so mismatches are effectively reduced. The PSNR values show that the matching accuracy between video frames is significantly improved.
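The article does not say how its PSNR values are computed. A common reading, assumed here, is to compare a reference frame with the neighbouring frame after it has been aligned using the estimated motion parameters, and to apply the standard PSNR definition for 8-bit images. The function name `psnr` and the `peak=255.0` default are illustrative.

```python
import numpy as np

def psnr(reference, aligned, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape.

    peak is the maximum possible pixel value (255 assumes 8-bit images).
    """
    ref = np.asarray(reference, dtype=np.float64)
    ali = np.asarray(aligned, dtype=np.float64)
    mse = np.mean((ref - ali) ** 2)    # mean squared error over all pixels
    if mse == 0:
        return float("inf")            # identical images
    return 10.0 * np.log10(peak ** 2 / mse)
```

Under this reading, a more accurate inter-frame match gives a smaller residual after alignment and therefore a higher PSNR.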

5. Conclusion

This paper studies image feature extraction and matching between video frames. The Euclidean distance between key point SIFT feature vectors is used to measure the similarity of feature points in two frames of the video and to perform rough matching. The RANSAC method is then used for a second, exact matching, which limits the effects of noise and outliers and resolves the mismatched points left by the rough matching. The simulation results show that the proposed algorithm effectively solves the mismatch problem that arises when only the Euclidean distance discrimination method is used, significantly improves the matching accuracy between video frames, and is robust.
